Airgap vs. Online: Deployment Targets

When deploying to an on-prem environment, it is important to understand the differences between target environments. Depending on an end customer’s security needs, there is a broad spectrum of potential environments ranging from internet-connected cloud VPCs to fully-airgapped datacenters with no outbound connectivity.

Environment Spectrum

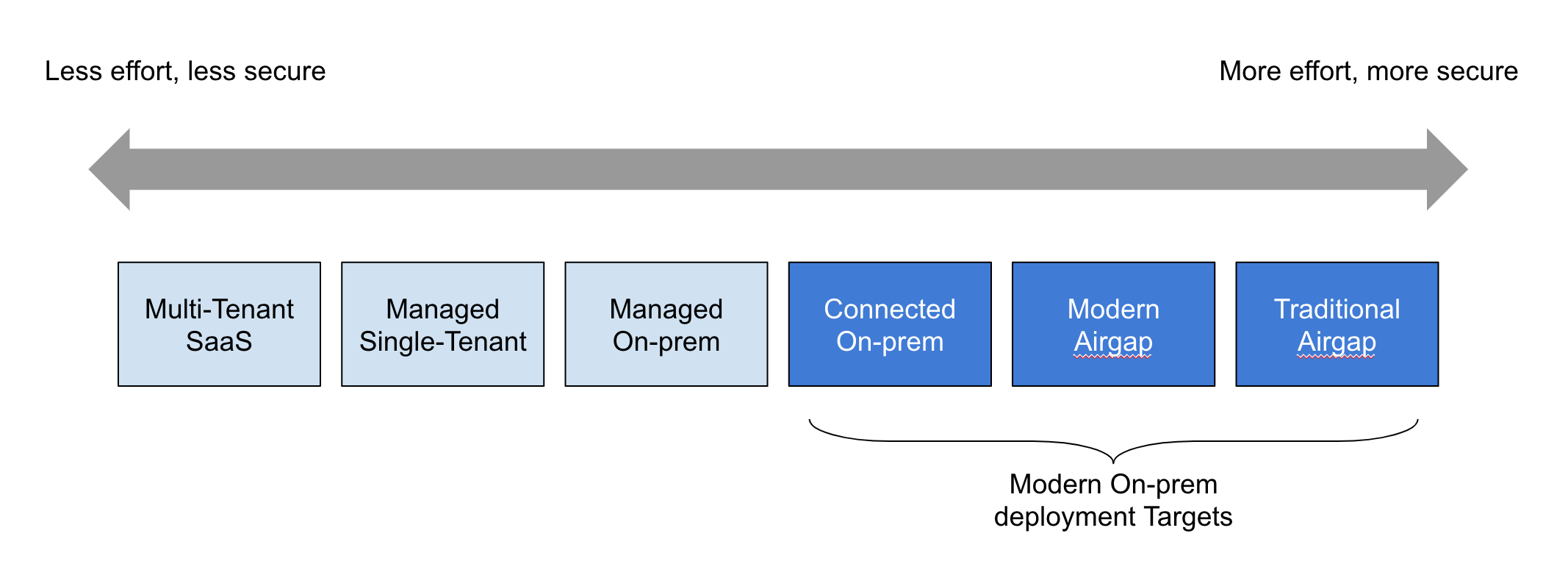

After exploring Modern On-prem delivery across a broad spectrum of vendors and organizations, we’ve found that these deployment models fall into a few broad categories. In this article we’ll explore the differences between several environments. Online Environments, Modern Airgapped Environments, and Traditional Airgapped Environments are shown on the spectrum above. For completeness, we’ll also include multi-tenant SaaS, managed single-tenant applications, and managed on-prem in this spectrum, even though we do not consider these to meet the minimum requirements for Modern On-prem.

Online Environments

The easiest deployment target by far is an environment where the infrastructure has access to an outbound internet connection. While no application data leaves the end user’s infrastructure, instances can download application artifacts and updates directly. Consequences of this model include:

- Updates are easy: a single-click process can be built for end users to install new versions of an application

- Uploading support and diagnostics information is easy

- With customer consent, vendors can even send anonymized machine data to a third party service like Datadog or Statsd. The line is blurry here, which is why it’s important to ensure customers are given the opportunity to opt in or out of such monitoring.

In general, while internet-connected environments are the easiest to bootstrap, they also require the most trust, as online environments are vulnerable to direct data exfiltration if the application is compromised.
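The opt-in requirement for monitoring described above could be sketched as a consent gate that every outbound payload passes through. This is a minimal illustration, not a prescribed design: `TelemetryConsent`, its fields, and `filter_outbound_payload` are hypothetical names, and the sketch assumes that the safest default is for everything to be opted out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TelemetryConsent:
    """Hypothetical per-installation settings controlled by the end user."""
    metrics_enabled: bool = False                # opted out unless the customer opts in
    support_bundle_upload_enabled: bool = False  # opted out unless the customer opts in


def filter_outbound_payload(payload: dict, consent: TelemetryConsent) -> dict:
    """Return only the parts of `payload` the customer has agreed to share."""
    allowed = {}
    if consent.metrics_enabled and "metrics" in payload:
        allowed["metrics"] = payload["metrics"]
    if consent.support_bundle_upload_enabled and "support_bundle" in payload:
        allowed["support_bundle"] = payload["support_bundle"]
    return allowed
```

Routing all outbound data through one function like this keeps the opt-in decision in a single, auditable place rather than scattered across the application.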

Modern Airgap

For more sensitive workloads, end users might require a vendor to deploy an application into an airgapped environment. In most cases, this describes a virtual or physical datacenter without any ability to make outbound internet connections. This means the application will be unable to directly download application assets or provide an application maintainer with valuable telemetry information.

Both modern and traditional airgapped datacenters often draw a separation between internet-connected environments (sometimes referred to as DMZs) and more secure environments where sensitive production workloads may be run. For example, it may be possible to download new artifacts in a Staging or Corp VPC, but in order to run those artifacts in production, they must be moved to an environment without outbound network access, usually via VPN, IPSec peering, or dedicated network lines.

A “Modern Airgap” environment is often a fairly modern datacenter environment in AWS, GCP, Azure, or another cloud provider. The “airgap” is achieved by configuring networking so that servers in production or other sensitive environments have no route to the public internet.

We usually deliver 2019 Airgap, which is essentially an AWS VPC without an internet gateway.

— Evan Phoenix How Hashicorp delivers On-prem with Replicated
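The "no route to the public internet" property can be checked mechanically. The sketch below is an assumption-laden illustration: it models a route table as a list of plain dictionaries with `destination` and `target` keys (a hypothetical shape, not any cloud provider's API response), and it treats targets whose IDs begin with `igw-`, `eigw-`, or `nat-` as internet-bound, following AWS naming conventions for internet, egress-only, and NAT gateways.

```python
# ID prefixes assumed to indicate a path to the public internet (AWS-style naming).
INTERNET_TARGET_PREFIXES = ("igw-", "eigw-", "nat-")


def find_internet_routes(routes):
    """Return the routes whose target would give instances outbound internet access."""
    return [r for r in routes if r["target"].startswith(INTERNET_TARGET_PREFIXES)]


def is_airgapped(routes):
    """A route table is considered airgapped if no route targets an internet-bound gateway."""
    return not find_internet_routes(routes)


# Hypothetical production route table: local traffic plus a peering connection only.
production_routes = [
    {"destination": "10.0.0.0/16", "target": "local"},
    {"destination": "10.1.0.0/16", "target": "pcx-0abc"},
]

# Hypothetical staging route table: same as production, plus a default route to an internet gateway.
staging_routes = production_routes + [
    {"destination": "0.0.0.0/0", "target": "igw-0123"},
]
```

Under these assumptions, `is_airgapped(production_routes)` is true and `is_airgapped(staging_routes)` is false. A check like this covers routing only; it says nothing about proxies or other paths out of the network.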

To deploy an application into a modern or traditional airgap environment, it is critical to simulate such an environment internally so extensive testing of the application can be performed. It can also be valuable to implement tools that can monitor the outbound connections an application makes. End users with such requirements will often run similar tests to verify the application in a lab environment before deploying it to production.
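One way to approach the outbound-connection monitoring mentioned above is to collect the destination addresses an application connects to during a test run and flag any that fall outside the networks expected to be reachable. The sketch below assumes the destinations have already been captured by some other tool; the function name and the example addresses are illustrative only.

```python
import ipaddress


def unexpected_destinations(destinations, allowed_networks):
    """Return destination IPs that are not inside any of the allowed networks.

    destinations: iterable of IP address strings observed during a test run.
    allowed_networks: iterable of CIDR strings the application may legitimately reach.
    """
    allowed = [ipaddress.ip_network(cidr) for cidr in allowed_networks]
    flagged = []
    for dest in destinations:
        addr = ipaddress.ip_address(dest)
        # Membership is False when the address and network IP versions differ.
        if not any(addr in net for net in allowed):
            flagged.append(dest)
    return flagged


# Example: only loopback and the internal 10.0.0.0/8 range are expected.
observed = ["10.0.1.5", "127.0.0.1", "93.184.216.34"]
violations = unexpected_destinations(observed, ["10.0.0.0/8", "127.0.0.0/8"])
```

Here `violations` contains only the public address. Failing an internal test suite whenever this list is non-empty is one way to catch accidental internet dependencies before an end user's lab does.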

Traditional Airgap

When maximum security measures must be taken due to data risk concerns, end users may require applications to be run in a datacenter that is completely disconnected from the outside world. In modern airgap scenarios it may be possible to download application assets in an internet-connected DMZ and to copy them in via a secure network tunnel. Conversely, traditional airgap describes any scenario where assets must be copied to physical media for transport into a datacenter. These scenarios are often referred to as “sneakernet”, because the only way to get bits into (or out of) a production environment is by walking a DVD or USB drive into a physical datacenter.

Of the different deployment models, traditional airgap presents the largest challenge for on-prem delivery. Because lengthy manual processes are required for updates, end users are likely to retrieve and apply updates far less frequently than in modern airgap environments, where update delivery can be automated to some extent. Further, when things go wrong, recovering diagnostic information from an application will be slow if not impossible, meaning troubleshooting must be done in a fully asynchronous and disconnected fashion.
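Because each trip across the airgap is slow and manual, it can help to make a transferred bundle self-verifying so that a corrupted or incomplete copy is detected before installation begins. The following is a minimal sketch of that idea using SHA-256 checksums; the manifest format and the function names are assumptions made for illustration, not part of any particular tool.

```python
import hashlib
from pathlib import Path


def build_manifest(bundle_dir):
    """Map each file's path (relative to bundle_dir) to its SHA-256 hex digest.

    Files are read fully into memory, which is fine for a sketch; large
    image archives would need chunked hashing instead.
    """
    root = Path(bundle_dir)
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(bundle_dir, manifest):
    """Return the relative paths that are missing or whose contents have changed."""
    actual = build_manifest(bundle_dir)
    return sorted(
        path for path, digest in manifest.items() if actual.get(path) != digest
    )
```

The vendor would run `build_manifest` before writing the bundle to physical media, and the end user would run `verify_manifest` inside the datacenter; an empty list means every file listed in the manifest arrived intact.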

Even though we’ll often refer to these environments as “traditional” or “sneakernet” environments, it’s important to note that it’s still possible to deliver Modern On-prem applications to these environments. Furthermore, nothing in the definition of Modern On-prem or the Kubernetes-based implementation presented here precludes an end customer from implementing the best practices of Modern On-prem in such an environment.

Aside: Deployment Targets and Kubernetes Market Bifurcation

While most of this guide assumes a Kubernetes-based implementation of Modern On-prem, vendors will also need to consider the case where a potential consumer of a Modern On-prem application may not have fully adopted Kubernetes for production workloads. This is often referred to as the bifurcated Kubernetes market.

In this case, it can be tempting to fork a SaaS application to deliver a more minimal, VM-focused approach like an OVA. The difficulties of a forked delivery model are discussed in depth in Release Management, but they bear repeating here. Rather than learning and implementing multiple schedulers or delivery models, we usually find the best way to deliver a Kubernetes-based application to an on-prem environment is to include a Kubernetes installer as part of the application package.

The decision to bundle Kubernetes with an application represents taking on significant set-up and maintenance costs across support and engineering organizations, and is not one to be made lightly. When choosing a Kubernetes distribution, it’s important to consider:

- Proximity to upstream Kubernetes

- Depth and breadth of built-in features: does a given distro require making additional decisions about Ingress, Networking, etc? To what extent can these add-ons be customized?

- Ease of patching and upgrading the cluster and its runtime add-ons

- Support for High Availability clusters, and the effort required to configure an HA cluster

- Ability to purchase support for the distribution

- History of addressing Kubernetes CVEs in a timely manner

Takeaways

With all this in mind, there are a number of factors that can help minimize the amount of overhead while ensuring successful delivery of an application to security-conscious customers.

- Find a distribution that is opinionated but pluggable. For example, kurl.sh ships with Heptio Contour as the default ingress controller, but allows for swapping in an alternative provider like nginx-ingress. The same goes for other add-ons providing networking, storage, and more.

- Choose a Kubernetes distribution that is as close as possible to upstream Kubernetes. Minimize the number of steps between the chosen distribution and the core, community-supported Kubernetes components. Depending on requirements, it might be prudent to avoid proprietary or closed-source distributions when embedding Kubernetes with an application.

- Consider whether an app will require additional support for installing and maintaining Kubernetes in an end customer’s environment. This depends not only on an engineering team’s expertise with bootstrapping, maintaining, and scaling Kubernetes, but also on the amount of engineering bandwidth they’re willing to spend on this. In many cases, it might be valuable to engage a third party to assist here. Choice of Kubernetes distribution might affect what support options are available.
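The "opinionated but pluggable" idea from the first takeaway can be pictured as a set of defaults that an installer applies unless a specific add-on is overridden. The sketch below is purely illustrative: the category names, the placeholder default values, and `resolve_addons` are hypothetical and do not reflect the actual configuration format of kurl.sh or any other distribution.

```python
# Hypothetical defaults a distribution might ship with. Only the ingress
# default mirrors the Contour example above; the others are placeholders.
DEFAULT_ADDONS = {
    "ingress": "contour",
    "networking": "default-cni",
    "storage": "default-storage",
}


def resolve_addons(overrides=None):
    """Merge user overrides onto the defaults, rejecting unknown categories."""
    overrides = overrides or {}
    unknown = set(overrides) - set(DEFAULT_ADDONS)
    if unknown:
        raise ValueError(f"unknown add-on categories: {sorted(unknown)}")
    return {**DEFAULT_ADDONS, **overrides}


# Swap only the ingress controller; everything else keeps its default.
addons = resolve_addons({"ingress": "nginx-ingress"})
```

A vendor who accepts every default makes no decisions at all, while one with specific requirements changes only the add-on in question.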

While it represents a big decision, embedding Kubernetes can be a pragmatic way to move upmarket and start exploring Modern On-prem without needing to wait for end users to adopt it. Further, as enterprises do adopt Kubernetes, Kubernetes installers and distributions will likely become commoditized. This will relieve vendor teams of the need to configure and maintain an on-prem cluster to run a Modern On-prem application. Whether a vendor or an end user provides the cluster, leveraging Kubernetes is still a highly reliable way to automate the lifecycle and maintenance of an On-prem application.