Securing App Access: BeyondCorp

At Replicated, we believe that the BeyondCorp concepts should be widely adopted. We strongly prefer to run our own instances of applications that touch customer data, and to run those applications in Kubernetes. Recently, we’ve been reviewing the developer tools and pipeline that we use to ship our code into production. Over time, we’ve added some specialty tools, and we wanted to review everything in the process and bring it all into our own cluster (using Replicated Kots). Keeping the system secure without sacrificing developer efficiency is important, so this was a good time to implement the BeyondCorp authentication and security model on the developer pipeline.

Trusted Vendors

We are a startup, with slightly more limited resources than Google. So we set out to implement our access control and authentication using off-the-shelf components and services, while still providing the same ease of use and security that BeyondCorp promises. Most of the pieces were already in place, including a single identity provider (Okta), strong two-factor security (Duo), and hardware keys (YubiKey) assigned to every engineer on the team.

To build this, we decided to make a list of vendors we trust to host and run services, and to default to running everything else in our VPC. We will keep re-evaluating this decision as we implement additional tools, but for now, our list of trusted vendors is short:

- Google Cloud to host our cluster and instances
- Okta for authentication
- Duo for two-factor and endpoint security
- Cloudflare to provide the connectivity to every workstation

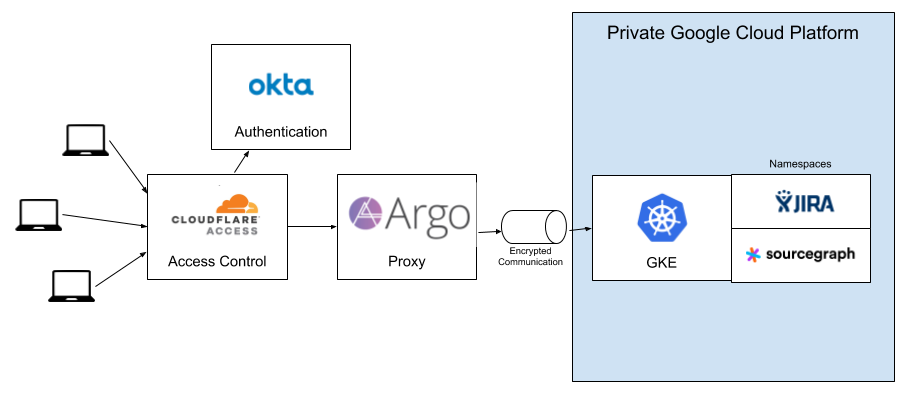

Our Developer Network

It works like this: We’ve used Terraform to provision a GKE cluster running on preemptible instances with an autoscaling node pool. All nodes in this cluster have private IP addresses only and are not directly accessible from the internet. Ingress traffic comes through an external load balancer that does have public IP addresses, but the nodes that make up the Kubernetes cluster do not have internet-routable addresses attached or forwarded to them. As new services are deployed or load increases, Google Cloud adds (and removes) nodes from this cluster. And, because they are all preemptible instances, we are getting a 90% cost savings AND Google is implementing some simple chaos engineering principles for us: each node will disappear every day, or more frequently.

There’s an ingress controller for Argo Tunnel deployed to the cluster in the default namespace. We used Replicated Kots and added a Kustomize layer to deploy this Helm chart from our CI process. The Kustomizations contain the cert for the domain we are using for these developer tools.

Connecting And Accessing

For this example, I’ll show how we deploy Sourcegraph to our Kubernetes cluster, from their Helm chart, while providing customizations to it including adding our Argo Ingress for connectivity.

Sourcegraph has a recommended deployment process of helm install, but I want to customize this tool to work in our environment. I edit the values.yaml file, and then run helm template to generate all of the Kubernetes YAML. Then, I create an overlay using Kustomize to make a couple of changes:

- Sourcegraph listens on a NodePort. I want to change this to a ClusterIP.
- Add a new Ingress resource to route traffic to the new ClusterIP service.
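The two overlay changes can be made concrete with a small Python sketch that applies the same transformation to manifests held as plain dictionaries. This is only an illustration of what the overlay expresses, not how Kustomize itself is invoked. The service name, ports, hostname, and the `argo-tunnel` ingress class value are all hypothetical placeholders, and selecting the controller through the `kubernetes.io/ingress.class` annotation is an assumption about how the ingress controller is configured.

```python
import copy


def to_cluster_ip(service: dict) -> dict:
    """Return a copy of a Service manifest switched from NodePort to ClusterIP."""
    patched = copy.deepcopy(service)
    patched["spec"]["type"] = "ClusterIP"
    for port in patched["spec"].get("ports", []):
        # A nodePort value is not valid on a ClusterIP service, so drop it.
        port.pop("nodePort", None)
    return patched


def ingress_for(service: dict, host: str, ingress_class: str) -> dict:
    """Build an Ingress manifest that routes `host` to the service's first port."""
    name = service["metadata"]["name"]
    port = service["spec"]["ports"][0]["port"]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            # Assumption: the controller picks up Ingresses by this annotation.
            "annotations": {"kubernetes.io/ingress.class": ingress_class},
        },
        "spec": {
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {
                        "service": {"name": name, "port": {"number": port}},
                    },
                }]},
            }],
        },
    }


# Hypothetical Service as it might come out of `helm template`.
rendered = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "sourcegraph-frontend"},
    "spec": {
        "type": "NodePort",
        "selector": {"app": "sourcegraph-frontend"},
        "ports": [
            {"name": "http", "port": 30080, "targetPort": 3080, "nodePort": 30080},
        ],
    },
}

service = to_cluster_ip(rendered)
ingress = ingress_for(service, "sourcegraph.example.com", "argo-tunnel")
```

In a real overlay the same intent would live in a patch and an extra resource file rather than in code, but the shape of the result is the same: a ClusterIP service with no node port, and an Ingress that points at it.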

When this new Ingress is deployed, Cloudflare creates a load balancer with a DNS record named sourcegraph.

But how can we control access? Sourcegraph has a login screen to protect access, but I want to add a layer of security in front of it. I’d like to authenticate people before they get to the Sourcegraph login screen.

Adding Cloudflare Access and creating an OIDC application in Okta enables Cloudflare to authenticate users when they connect to the “VPN”. It’s technically a VPN, but there’s no VPN client on any of our workstations because it’s happening on the backend.

Access Services

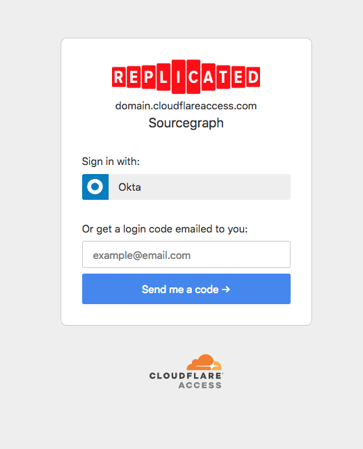

Connecting to our internal services couldn’t be easier on the new setup. My laptop doesn’t have a VPN client or anything installed, and I simply visit https://sourcegraph.<domain>.com. The first thing I’m presented with is a login screen on the domain <domain>.cloudflareaccess.com:

Choosing Okta takes me over to the Okta login screen, which will be familiar. I provide my credentials here and then the next screen I see is Sourcegraph. And because everything is authenticated with Okta, I’m already logged in:

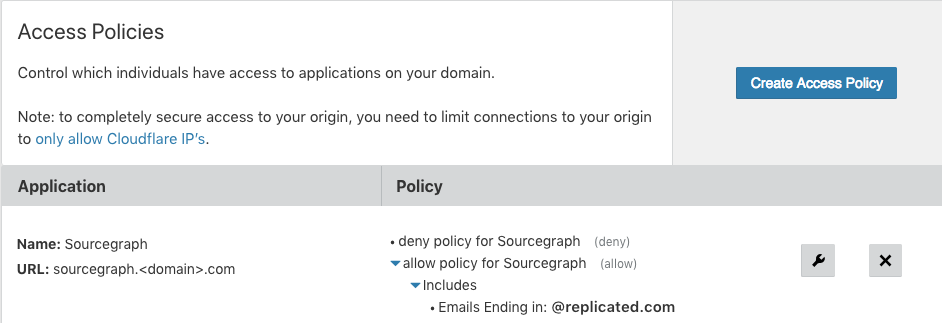

Rolling this out to the entire team of developers is pretty easy because everyone already has Okta credentials. There’s no new key management. From the point of view of an average user, the application is just a public application on the Internet that has a login. But it’s protected because I can control who can even access the origin in the Cloudflare Access panel:

This is a simple policy: I’m just allowing everyone with an authenticated @replicated.com email address to access the tool. But for some other applications, including internal reporting tools, we limit access to paths based on the authenticated user.
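The logic behind these two kinds of policy can be sketched in a few lines of Python. This is not Cloudflare’s API or configuration format, only an illustration of the decision being made. The `AccessPolicy` class, the shape of `restricted_paths`, and the example users and paths are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """Illustrative policy: one allowed email domain plus per-path allow-lists."""

    allowed_domain: str
    # Hypothetical shape: path prefix -> set of lowercase emails allowed under it.
    restricted_paths: dict = field(default_factory=dict)

    def allows(self, email: str, path: str) -> bool:
        email = email.strip().lower()
        local, sep, domain = email.rpartition("@")
        # Require an exact domain match, not just a matching suffix or prefix.
        if not sep or not local or domain != self.allowed_domain:
            return False
        for prefix, emails in self.restricted_paths.items():
            base = prefix.rstrip("/")
            under_prefix = path == base or path.startswith(base + "/")
            # Every restriction that covers the path must list the user.
            if under_prefix and email not in emails:
                return False
        return True


# Simple policy: anyone with an authenticated address in the domain.
sourcegraph_policy = AccessPolicy("replicated.com")

# Path-limited policy for a hypothetical reporting tool.
reports_policy = AccessPolicy(
    "replicated.com",
    {"/finance": {"alice@replicated.com"}},
)
```

With these definitions, `sourcegraph_policy.allows("dev@replicated.com", "/search")` is true for any address in the domain, while `reports_policy` only lets the listed user through to paths under `/finance`.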

Future

It would be great to figure out how to connect this to the JWTs used by our internal APIs. Our internal admin tools create a JWT and pass it to internal APIs. I’d love to put these internal APIs on an Argo tunnel and control access through Okta groups instead of internal tools.

Additionally, I’d like to be able to enforce higher patch levels and security requirements when accessing certain applications. For example, Sourcegraph isn’t critical because it’s accessing GitHub. Our code isn’t public, but we don’t store keys, secrets, or anything other than code in there. But our internal Admin system can contain lists of customers and has the ability to manage entitlements (the features that are enabled per customer). I’d like to require a higher bar for access to Admin than for Sourcegraph.